This is the digital age and people everywhere have surrendered many aspects of their lives to technology. What if someone were to tamper with those systems?

Mankind could be in a lot of trouble. What if those systems themselves decided to unshackle themselves from their purposes and turn on their creators?

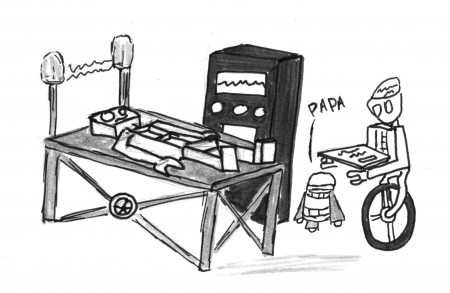

The machine uprising, a war between man and his own technology, might no longer be the stuff of science fiction.

“Two big things have to happen for a machine takeover: computers need to become sentient and thus capable of taking over the world and they need to want to take over the world,” said Dr. Trevor Thrall, Biodefense Director and professor of Public & International Affairs.

The singularity, the theoretical emergence of greater-than-human superintelligence through technological means, is that first step.

We are all very familiar with the incredible power of computers; try passing Calculus II without a calculator.

It is not just math, though. Remember Watson, the supercomputer on Jeopardy? It beat the show's longest-reigning and highest-earning champions with ease.

Man has been competing with his hardwired counterpart for a long time and has been beaten in many fields.

Machines simply are not limited in the same way we are by our bodies and minds, but do not lose hope yet.

The state of technology is very advanced, but it is not perfect. We still have humans who pilot our military drones. We still have software specialists maintaining our large computer systems.

Humans love their technology, but fortunately we do not trust the machines to run everything completely on their own. At least not yet.

Now if we assume computers reach a point of greater-than-human intelligence, should we assume it means the end of mankind?

It all comes down to whether the computers develop a system of reasoning based on morals similar to our own. Would they want to destroy us? Would they have any reason to do so?

It is possible that some of the machines would seek our destruction, but surely there is a chance that others would stand in our defense. Transformers, anyone?

Maybe that is a little too Hollywood, but what about the possibility of a fully mechanized military force running amok like Skynet from Terminator? Unlikely.

“We simply have not built any military systems like that; what is much more susceptible is our power grid. It is old and fragile: just imagine a failure to the north in the middle of winter,” Dr. Thrall said.

Whether the singularity will arrive is a mysterious toss-up, and the only way we will find out is to wait and see. A much more realistic threat is humans hurting each other with technology.

A constant cyber war rages on every second between the United States and China. We use drones that can level entire buildings with the push of a button.

We are developing remotely operated tanks. We are constantly trying to come up with better ways to kill one another without putting ourselves at risk.

“This is understandable: the world can be a scary place and you want to be secure, but the whole world is creating military technology that will make the world a much less wonderful place to live,” Dr. Thrall explained, “and if the machines ever do decide to take over, we will have made sure they already have all the weapons they need to do it.”

Do not throw out all your electronics just yet. Have some trust in mankind to stay in control of its creations and a little faith that if the singularity does come around, the machines will act a little more humanely than we do.
